Four steps for industry to lead the way in the responsible use of AI

In this blog, David Mudd, Global Head of Digital Trust Assurance, sets out four steps industry can take to shape a positive future for AI by building trust and confidence.

Great technology brings opportunity – along with questions around responsible use. According to EY[1], two-thirds of CEOs think AI is a force for good, but the same proportion say more work is needed to address the social, ethical and security risks. As BSI’s Trust in AI Poll[2] identified, there is public uncertainty about using AI. More than half of people worry AI risks exacerbating social divides.

In a diverse global economy, agreeing a common approach on AI may seem a tall order. Equally, AI is a complex ecosystem – no single organization owns all the pieces. But there is a golden opportunity for industry to be a constructive partner, leading the way in responsible use of AI and building societal trust by collaborating across markets and sectors. Here are four steps leaders can take.

1. Establish the right governance systems

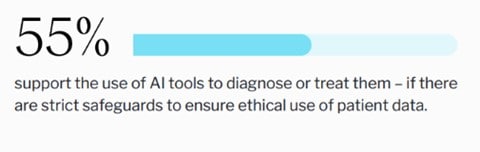

Ensuring AI is used ethically is integral to realizing its benefits. BSI’s poll found, for example, that a majority of people support the use of AI tools to diagnose or treat them – with strict safeguards to ensure ethical use of patient data.

With AI’s capabilities developing fast[3], appropriate governance is key. Leaders can focus on establishing a culture of trusted and responsible use. A good starting point could be agreeing the intended outcomes and ROI, and the impacts on all stakeholder groups including the workforce. From there, organizations can put in place an effective governance process, such as the certifiable AI management system framework (ISO/IEC 42001).

Good governance involves understanding the overall business environment. Ultimately, AI has the potential to have a positive impact within an organization, provided its rollout is aligned to wider business strategy, risks are considered and the structure is in place to provide suitable oversight.

Establishing and maintaining the appropriate culture of trusted and responsible development and use of AI, in line with business strategy, can help forge a path for AI to be a force for good.

2. Consider built-in bias – from the beginning

Industry can also take the lead by working to mitigate bias. Bias isn’t solely an “AI thing” – but there are concerns, for example, about whether AI search engines will offer gender-stereotyped results[4].

Crucially, the issue of bias starts with the development process and the data sets being used[5]. It can come from a team’s assumptions, from errors and issues in the data, or from how the model is steered during training. So managing the AI development process from the start can help us get the most out of AI.

There is an opportunity for industry to take the lead, ensuring that those creating AI are asking the right questions, have diverse teams and avoid prioritizing one group over another. For those choosing an AI tool, it’s about due diligence. We might not be able to eliminate bias, but by being alert to the guardrails needed, we can do our best to ensure AI is used fairly to benefit us all.

3. Start a conversation within your organization about AI

Using AI-based services carries an element of business risk, the level of which depends on whether the organization is a passive user or is creating AI services, and on how AI is going to be used. Responsible AI leadership means treating business risk as an opportunity – and communicating the mitigations that are in place.

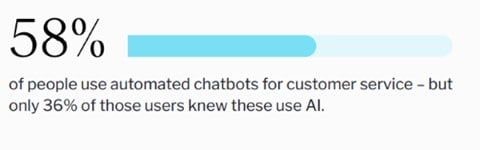

It could be helpful to change the language around AI from 'new and scary' to another aspect of business as usual that everyone needs to manage diligently. Whether an organization is creating or using AI, it should be part of a clear business strategy where everyone understands the benefits. This is particularly pertinent when you consider that understanding of AI is low – for example, 62% of people are unaware that tracking apps like Strava use AI. Tackling this knowledge gap is key.

4. Securing AI: trust and the digital vaccine

A crucial part of building trust in AI is trust that sensitive data will be protected and that the AI algorithm can’t be interfered with. That means security for the AI ecosystem is paramount. Organizations can build trust in their use of AI by making sure security measures are strong.

Trust is the cornerstone of technological advancement, so prioritizing it through secure, ethical and responsible use, backed by robust management, can drive positive outcomes.

Nearly two-thirds of people globally expect their industry to use AI daily by 2030. AI is not a theory but a reality, set to transform every aspect of life and work. Leaders now have an incredible opportunity[6] to chart a course on the best use of AI, so it can be a true force for good.

This content is from BSI’s Shaping Society 5.0 campaign.

David Mudd, Global Head of Digital Trust Assurance, BSI

David is responsible for BSI’s Digital Trust Assurance solutions, including training, testing, assessing and certifying for Digital Governance and Risk Management, Cybersecurity and Privacy, Digital Supply Chain, Data Stewardship and Artificial Intelligence. He helps organizations to adopt disruptive digital technologies safely.

[1] The CEO Outlook Pulse – July 2023, EY, July 2023

[2] BSI partnered with Censuswide to survey 10,144 adults across nine markets (Australia, China, France, Germany, India, Japan, Netherlands, UK, and US) between 23rd and 29th August 2023

[3] AI has started to display ‘compounding exponential’ progress, founder of ChatGPT rival claims, Independent, February 2023

[4] Artificial Intelligence: examples of ethical dilemmas, UNESCO, April 2023

[5] Understanding algorithmic bias and how to build trust in AI, PwC, January 2022

[6] To Be a Responsible AI Leader, Focus on Being Responsible, MIT Sloan Management Review, September 2022